The AI Decision Framework: The Guide to Choosing the Right AI Strategy

By Maher Hanafi, SVP of Engineering at Betterworks. Originally published on Medium on August 1, 2025.

Don’t just pick a model; build a strategy. Move from simple selection to robust planning with this comprehensive 3-layer framework.

As a technology leader who has led AI adoption and implementation for the last few years, I get one question more often than any other, whether from my own team, fellow executives or industry partners: “Which AI model should we use?”

It’s an understandable question. The excitement is high and the market is crowded with powerful options, from OpenAI’s GPT and Google’s Gemini to open-source titans like Meta’s Llama and the Mistral models. Everyone wants to harness the power of generative AI.

Yet, after navigating this landscape for long enough, I’ve come to believe this is the wrong question to ask first. It’s like asking “Which engine should I buy?” without knowing if you’re building a race car, a cargo ship or an airplane. It’s a dangerously premature question that mistakes a single, flashy component for the entire, complex solution.

The reality is that choosing an AI model is no longer a simple technical decision. It’s a multi-layered strategic exercise that impacts your budget, security posture, brand reputation, and long-term roadmap. To navigate this complexity, you need more than a feature comparison chart; you need a strategic framework.

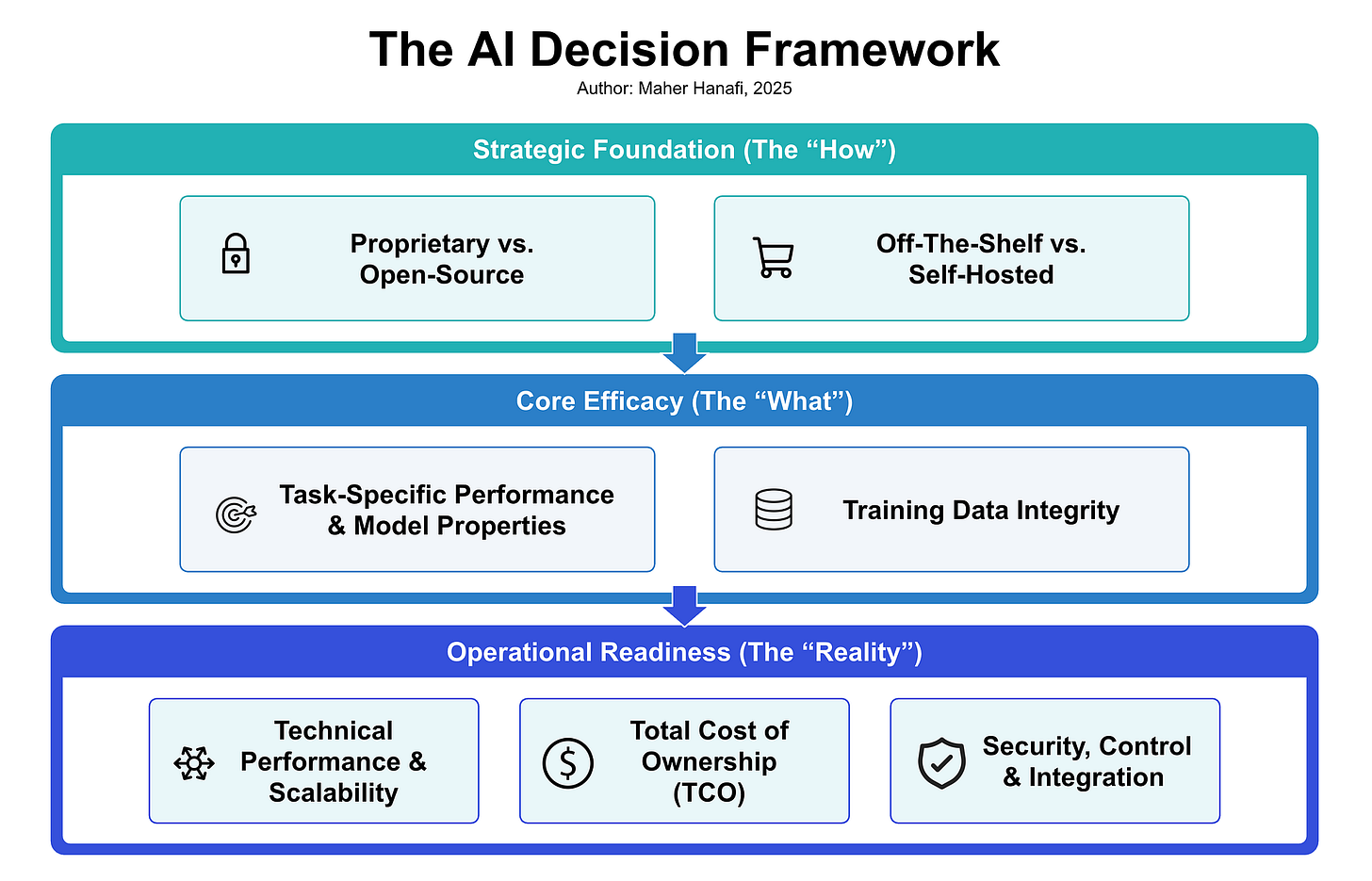

That’s why I developed The AI Decision Framework, a three-layered approach we use to bring clarity to this process. It’s a guide for leaders to move from high-level strategic planning down to the practical realities of implementation, ensuring you build a solution that is not only technically impressive but also secure, cost-effective, and fundamentally valuable to your business.

Layer 1: The Strategic Foundation (The ‘How’)

This first layer is the most critical. Your decisions here will dictate the boundaries for everything that follows. It’s where you define your overall philosophy and approach to using AI in your organization.

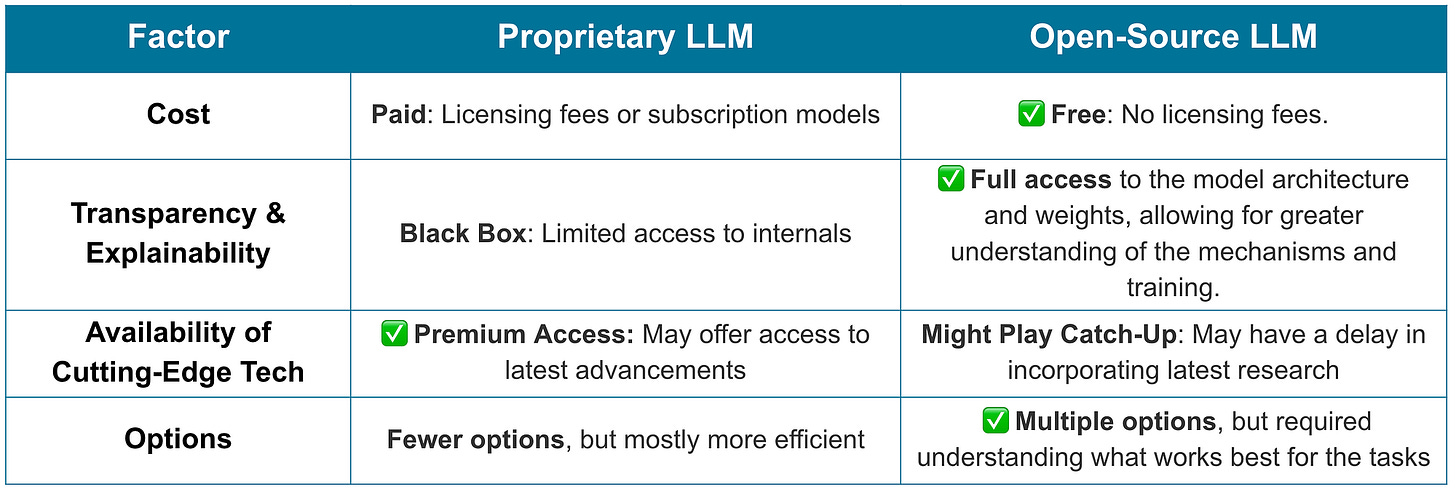

Proprietary vs. Open-Source: The Control and Convenience Spectrum

This is the classic “build vs. buy” dilemma, reimagined for the AI era.

Proprietary Models (e.g., GPT-4, Gemini, Claude): These are commercial, closed-source models offered by large tech companies. They offer access to state-of-the-art technology with the convenience of dedicated support and managed infrastructure. However, this convenience comes at a cost: high licensing fees, a “black box” nature that limits transparency and explainability, and the risk of vendor lock-in. You are fundamentally trusting an external company with a core part of your technology.

Open-Source Models (e.g., Llama 3, Mistral, Gemma): These models publish their weights, which gives you far more transparency than a closed API. You can examine their architecture, customize them deeply, and run them on your own infrastructure, ensuring data sovereignty. This path avoids per-call licensing fees and fosters innovation, though license terms vary from model to model and are worth reading before you commit. It also demands significant in-house expertise in MLOps, infrastructure management, and model maintenance. You are responsible for everything.

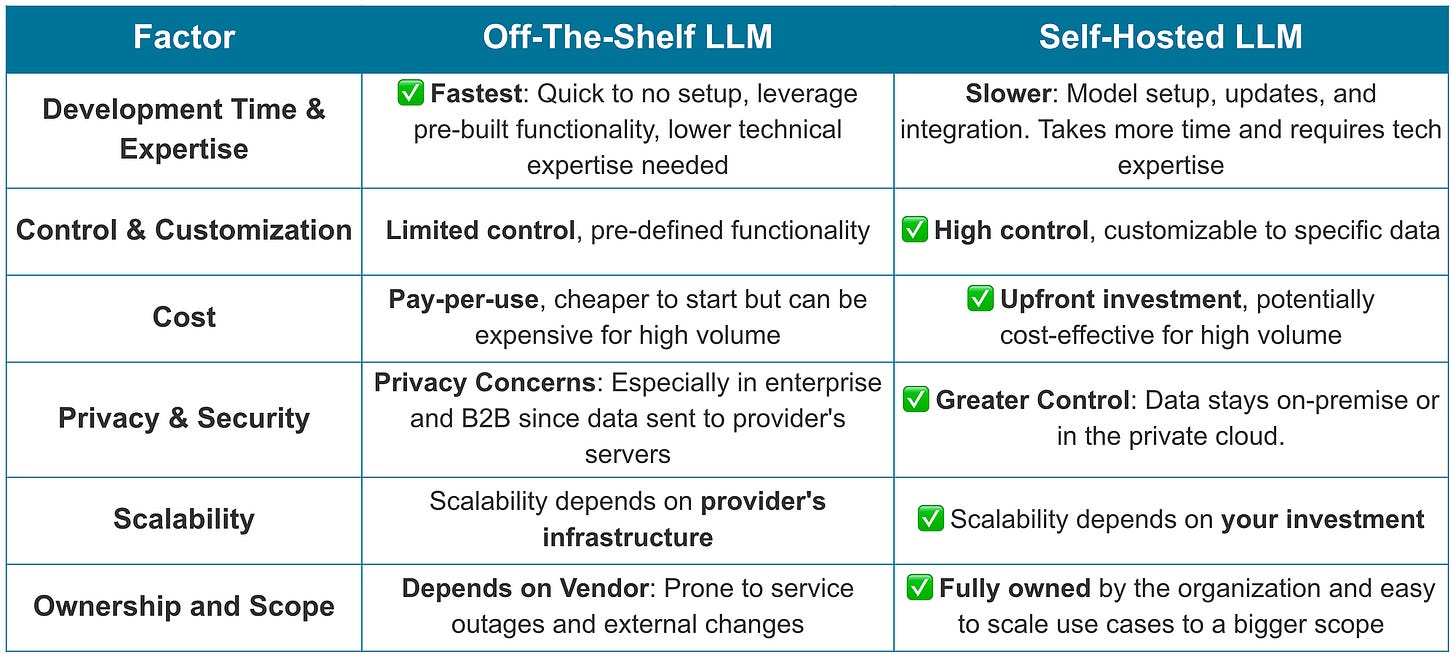

Off-the-Shelf (Managed) vs. Self-Hosted: Your Deployment Philosophy

This decision determines where your AI solution will live and who will be responsible for keeping it running.

Off-the-Shelf (SaaS/API): Using a managed API is the fastest way to get started. The vendor handles uptime, scaling, and security, allowing your team to focus on the application layer. This is ideal for rapid prototyping and for teams without deep infrastructure expertise. The trade-off is a loss of control. Your data is sent to a third party, and you are limited by the customization options the vendor provides.

Self-Hosted: Hosting a model on your own infrastructure (on-premises or in your private cloud) provides maximum control over security, data privacy, and customization. This is often essential for companies in regulated industries or for applications requiring deep integration with internal data sources. However, this path carries a heavy burden of initial setup costs, ongoing maintenance, and the need for a dedicated team to manage it all.
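One way to keep this deployment decision reversible is to have application code depend on a small interface rather than on a specific vendor or serving stack. The sketch below is illustrative only: `TextModel`, `ManagedModel`, `SelfHostedModel`, and the two fake backends are hypothetical names, and no real vendor API is called.

```python
from typing import Callable, Protocol


class TextModel(Protocol):
    """Anything that can turn a prompt into text."""

    def complete(self, prompt: str) -> str: ...


class ManagedModel:
    """Wraps a managed API. `send_request` is a hypothetical callable
    standing in for whatever client your vendor provides."""

    def __init__(self, send_request: Callable[[str], str]) -> None:
        self._send_request = send_request

    def complete(self, prompt: str) -> str:
        return self._send_request(prompt)


class SelfHostedModel:
    """Wraps a model you run yourself. `run_inference` is a hypothetical
    callable standing in for your own serving layer."""

    def __init__(self, run_inference: Callable[[str], str]) -> None:
        self._run_inference = run_inference

    def complete(self, prompt: str) -> str:
        return self._run_inference(prompt)


def summarize_ticket(model: TextModel, ticket: str) -> str:
    """Application logic that does not care where the model lives."""
    return model.complete(f"Summarize this support ticket in one sentence: {ticket}")


# Fake backends so the sketch runs without any network or GPU.
def fake_vendor_call(prompt: str) -> str:
    return f"[managed] {len(prompt)} chars received"


def fake_local_inference(prompt: str) -> str:
    return f"[self-hosted] {len(prompt)} chars received"


for backend in (ManagedModel(fake_vendor_call), SelfHostedModel(fake_local_inference)):
    print(summarize_ticket(backend, "Customer cannot log in after password reset."))
```

With this shape, moving from a managed API to a self-hosted model (or back) changes one constructor call instead of every call site, which softens the lock-in risk without removing it.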

Layer 2: Core Efficacy (The ‘What’)

Once your strategic path is set, you can evaluate the actual tool for the job. This layer is about moving past the marketing hype and validating performance against your real-world business needs.

Beyond Benchmarks: Measuring Task-Specific Performance

Public leaderboards are interesting, but they don’t tell you how a model will perform for YOUR specific use case. You must build your own custom evaluation suite.

If you’re building a customer service bot, test its accuracy against your company’s FAQs and its tone against your brand guidelines.

If you’re creating a code-generation tool, evaluate its output against your internal coding standards and codebases.

Consider multilingual requirements. Does the model have the fluency and cultural nuance required to serve your global audience? Generic benchmarks rarely capture this effectively.
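A custom evaluation suite does not have to start big. The following is a minimal sketch, assuming each test case lists facts the answer must contain and phrases it must avoid. The `EvalCase` structure, the keyword-matching rule, and `stub_model` are all illustrative assumptions; a real suite would plug in your actual model call and likely use richer scoring.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    must_include: list[str]  # facts the answer has to mention
    must_avoid: list[str]    # off-brand or forbidden phrases


def score_case(answer: str, case: EvalCase) -> bool:
    """Pass only if every required fact appears and no forbidden phrase does."""
    text = answer.lower()
    has_facts = all(term.lower() in text for term in case.must_include)
    is_on_brand = not any(term.lower() in text for term in case.must_avoid)
    return has_facts and is_on_brand


def run_suite(model: Callable[[str], str], cases: list[EvalCase]) -> float:
    """Return the fraction of cases the model passes (0.0 for an empty suite)."""
    if not cases:
        return 0.0
    passed = sum(score_case(model(case.prompt), case) for case in cases)
    return passed / len(cases)


def stub_model(prompt: str) -> str:
    """Stand-in for a real model call, so the sketch runs offline."""
    if "refund" in prompt.lower():
        return "You can request a refund within 30 days of purchase."
    return "No idea, figure it out yourself."


# Hypothetical cases drawn from a company FAQ and brand guidelines.
CASES = [
    EvalCase("What is your refund policy?", ["30 days"], ["figure it out"]),
    EvalCase("How do I reset my password?", ["reset link"], ["figure it out"]),
]

pass_rate = run_suite(stub_model, CASES)
print(f"pass rate: {pass_rate:.0%}")
```

Because `run_suite` takes any callable, the same cases can be run against every candidate model, which turns a leaderboard debate into a like-for-like comparison on your own data.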

The Data Dilemma: Integrity, Bias and Provenance

The principle of “garbage in, garbage out” has never been more relevant. A model is a reflection of its training data. You must scrutinize the source, quality, and potential biases of any model you consider. If you plan to fine-tune a model, the quality of your own data is paramount. This involves rigorous data cleaning, labeling, and a continuous process to mitigate biases that could harm your customers or your brand.
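Before fine-tuning, even a basic audit of your own dataset can surface problems early. This is a small sketch under simple assumptions: examples are `(text, label)` pairs, duplicates are detected after lowercasing and collapsing whitespace, and label balance is used as a rough first signal of skew. The function names and the sample rows are hypothetical, and a real bias review goes well beyond label counts.

```python
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so near-identical rows compare equal."""
    return " ".join(text.lower().split())


def audit_dataset(examples: list[tuple[str, str]]) -> dict:
    """Count empty rows and duplicates, and report label shares of what remains."""
    seen: set[str] = set()
    labels: Counter = Counter()
    duplicates = 0
    empty = 0
    for text, label in examples:
        key = normalize(text)
        if not key:
            empty += 1
        elif key in seen:
            duplicates += 1
        else:
            seen.add(key)
            labels[label] += 1
    kept = sum(labels.values())
    shares = {label: count / kept for label, count in labels.items()}
    return {"kept": kept, "duplicates": duplicates, "empty": empty, "label_shares": shares}


# Hypothetical sample rows for illustration only.
SAMPLE = [
    ("Great product", "positive"),
    ("great   product", "positive"),  # duplicate after normalization
    ("", "negative"),                 # empty text
    ("Terrible support", "negative"),
    ("Love it", "positive"),
]

report = audit_dataset(SAMPLE)
print(report)
```

A heavily lopsided `label_shares` result is a prompt to look closer at how the data was collected, not proof of bias on its own.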

Layer 3: Operational Readiness (The ‘Reality’)

This is the final gate. A model can be strategically aligned and perform well in a lab, but if it’s not practical for production, it’s useless.

The True Price Tag: Total Cost of Ownership (TCO)

Look beyond the sticker price. A comprehensive financial analysis must include:

Licensing or API call costs.

Infrastructure costs: GPU hours for hosting, fine-tuning, and inference.

Data storage and processing costs.

Personnel costs: The salaries of the engineers and data scientists needed to build, integrate, and maintain the solution.
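The four cost categories above can be put into a simple monthly model to compare options side by side. The sketch below is a rough illustration: every number in it is a made-up placeholder rather than a real price, and `MonthlyCosts`, `api_cost`, and `gpu_cost` are hypothetical helpers.

```python
from dataclasses import dataclass


@dataclass
class MonthlyCosts:
    api_or_license: float
    infrastructure: float
    data: float
    personnel: float

    def total(self) -> float:
        return self.api_or_license + self.infrastructure + self.data + self.personnel


def api_cost(requests_per_month: int, tokens_per_request: int, price_per_1k_tokens: float) -> float:
    """Usage-based API spend for one month."""
    return requests_per_month * tokens_per_request / 1000 * price_per_1k_tokens


def gpu_cost(gpu_count: int, hours_per_month: float, price_per_gpu_hour: float) -> float:
    """Hosting spend for always-on GPUs for one month."""
    return gpu_count * hours_per_month * price_per_gpu_hour


# Placeholder figures only; substitute your own quotes and salaries.
managed = MonthlyCosts(
    api_or_license=api_cost(1_000_000, 1_500, 0.002),
    infrastructure=0.0,
    data=500.0,
    personnel=15_000.0,
)
self_hosted = MonthlyCosts(
    api_or_license=0.0,
    infrastructure=gpu_cost(4, 730, 2.50),
    data=800.0,
    personnel=40_000.0,
)

for name, costs in (("managed", managed), ("self-hosted", self_hosted)):
    print(f"{name}: {costs.total():,.0f} per month")
```

The useful part is less the totals than the shape: API spend scales with request volume while GPU and personnel costs are largely fixed, so the comparison can flip as usage grows.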

Performance Under Pressure: Scalability and Latency

Here, we distinguish technical performance from task performance. A model might be highly accurate (task performance), but too slow (technical performance) for a real-time application. You must define your requirements for latency and throughput and ensure your chosen architecture can scale to meet demand without costs spiraling out of control.
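Latency requirements are easier to enforce when they are written as a number and checked against measurements. The following is a minimal sketch, assuming a p95 latency budget and a single sequential client. `fake_model_call` is a stand-in for a real inference call, and the throughput figure here reflects one caller only, not a concurrent load test.

```python
import statistics
import time
from typing import Callable


def measure_latencies(call: Callable[[], object], runs: int) -> list[float]:
    """Time `runs` sequential calls and return each duration in seconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append(time.perf_counter() - start)
    return samples


def summarize(samples: list[float]) -> dict[str, float]:
    """Report median, 95th percentile, and sequential requests per second.

    Needs at least two samples for the percentile calculation.
    """
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    return {
        "p50": statistics.median(samples),
        "p95": cuts[94],  # 95th of the 99 percentile cut points
        "throughput_rps": len(samples) / sum(samples),
    }


def meets_budget(summary: dict[str, float], p95_budget_s: float) -> bool:
    """True when the measured p95 latency is within the agreed budget."""
    return summary["p95"] <= p95_budget_s


def fake_model_call() -> str:
    """Stand-in for a real inference call."""
    time.sleep(0.001)
    return "ok"


summary = summarize(measure_latencies(fake_model_call, runs=20))
print(summary, "within budget:", meets_budget(summary, p95_budget_s=0.5))
```

Tracking p95 rather than the average matters because a handful of slow responses can ruin a real-time experience while barely moving the mean.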

The Final Hurdle: Security, Control, and Integration

Finally, the solution must pass your enterprise-grade checks. This includes rigorous security reviews, ensuring compliance with regulations like GDPR or HIPAA, and confirming that the model can be integrated smoothly into your existing MLOps pipelines and CI/CD workflows. This “last mile” of integration is where many promising AI projects falter.

Conclusion: From Model Selection to Strategic Capability

Choosing an AI solution is a formidable task, but it’s manageable when you replace a simple checklist with a robust strategic framework. By thinking in layers, from the high-level Strategic Foundation through the detailed Core Efficacy analysis to the practical Operational Readiness, you shift the conversation.

You move from asking “Which model is best?” to asking “Which strategy will create the most secure, sustainable, and valuable capability for our business?” And that is a question that leads to truly transformative results.

Generative AI: From Proof-of-Concept to Production Series

Article 1: Understanding Generative AI: Capabilities and Potential

Article 2: The AI Decision Framework: The Guide to Choosing the Right AI Strategy

This series takes a deep dive into the journey of implementing Generative AI, from initial conceptualization to full-scale production, using real-world examples. The articles explore what Generative AI is, how it works, its vast capabilities, and how organizations are leveraging it to unlock new value.

About Maher Hanafi

Maher is an executive leader with a proven track record of transforming engineering teams, driving cloud migrations, and delivering impactful SaaS solutions across diverse industries. His passion for technology has recently been ignited by the emergence of AI. Its transformative power to learn, adapt, and solve real-world problems has inspired him to champion its responsible development and explore its vast potential to empower people. He thrives on creating environments where engineers feel valued, challenged, and equipped to excel. Recognized as an Engineering Fellow for notable and significant contributions to the engineering teams and culture, Maher is an entrepreneur, founder in technology and digital marketing, advisor, mentor, and public speaker.